Researchers developed a technique to increase the frame rate of VR by synthesizing the second eye’s view instead of rendering it.

The paper is titled “You Only Render Once: Enhancing Energy and Computation Efficiency of Mobile Virtual Reality”, and it comes from two researchers from University of California San Diego, two from University of Colorado Denver, one from University of Nebraska–Lincoln, and one from Guangdong University of Technology.

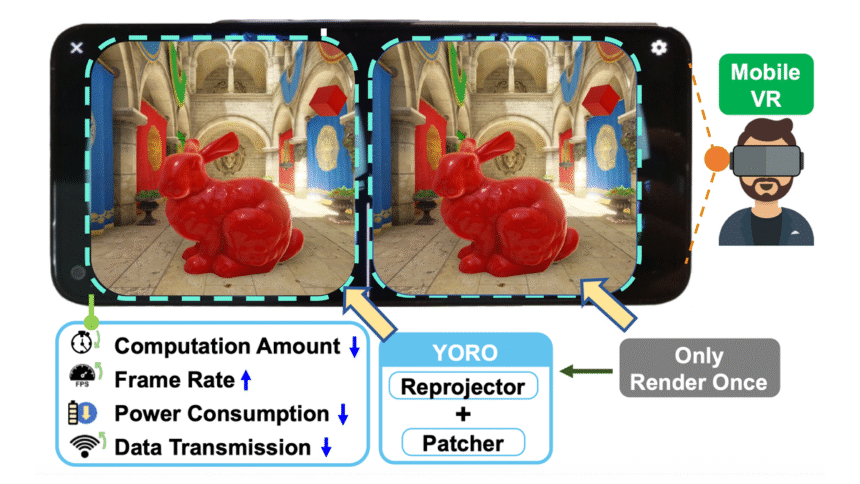

Sample scenes running on a smartphone.

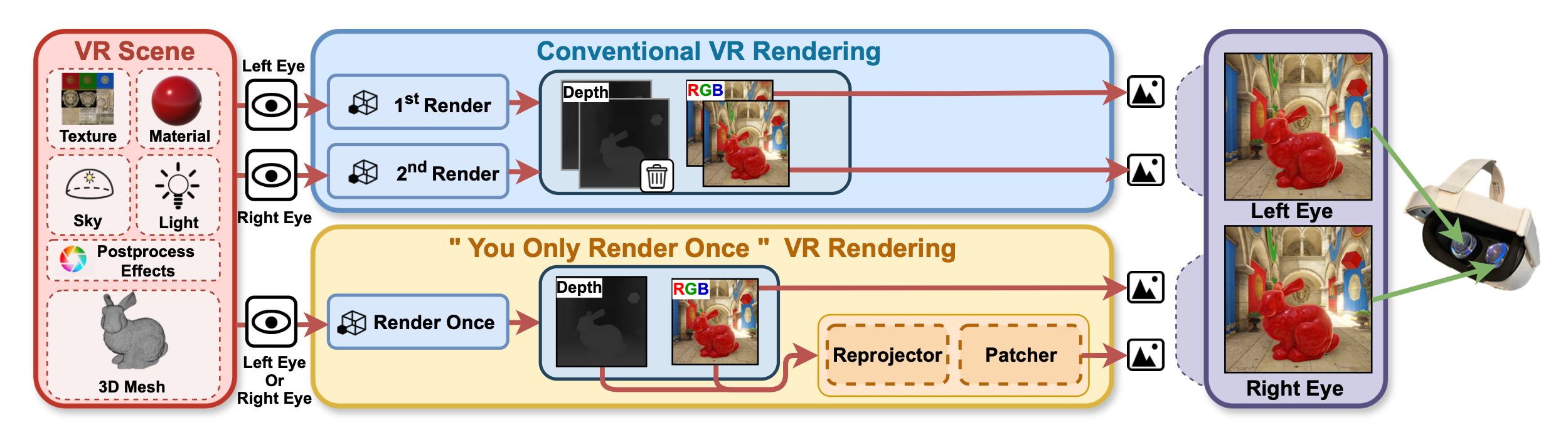

Stereoscopic rendering, the need to render a separate view for each eye, is one of the main reasons that VR games are harder to run than flatscreen games, leading to their lower relative graphical fidelity. This makes VR less appealing to many mainstream gamers.

While standalone VR headsets like Quests can optimize the CPU draw calls of stereo rendering through a technique called Single Pass Stereo, the GPU still has to render both eyes, including the expensive per-pixel shading and memory overhead.

You Only Render Once (YORO) is a fascinating new approach. It starts by rendering one eye, as usual. But instead of rendering the other eye’s view at all, it efficiently synthesizes it through two stages: Reprojection and Patching.

To be clear, YORO is not an AI system. There is no neural network involved here, and thus there won’t be any AI hallucinations.

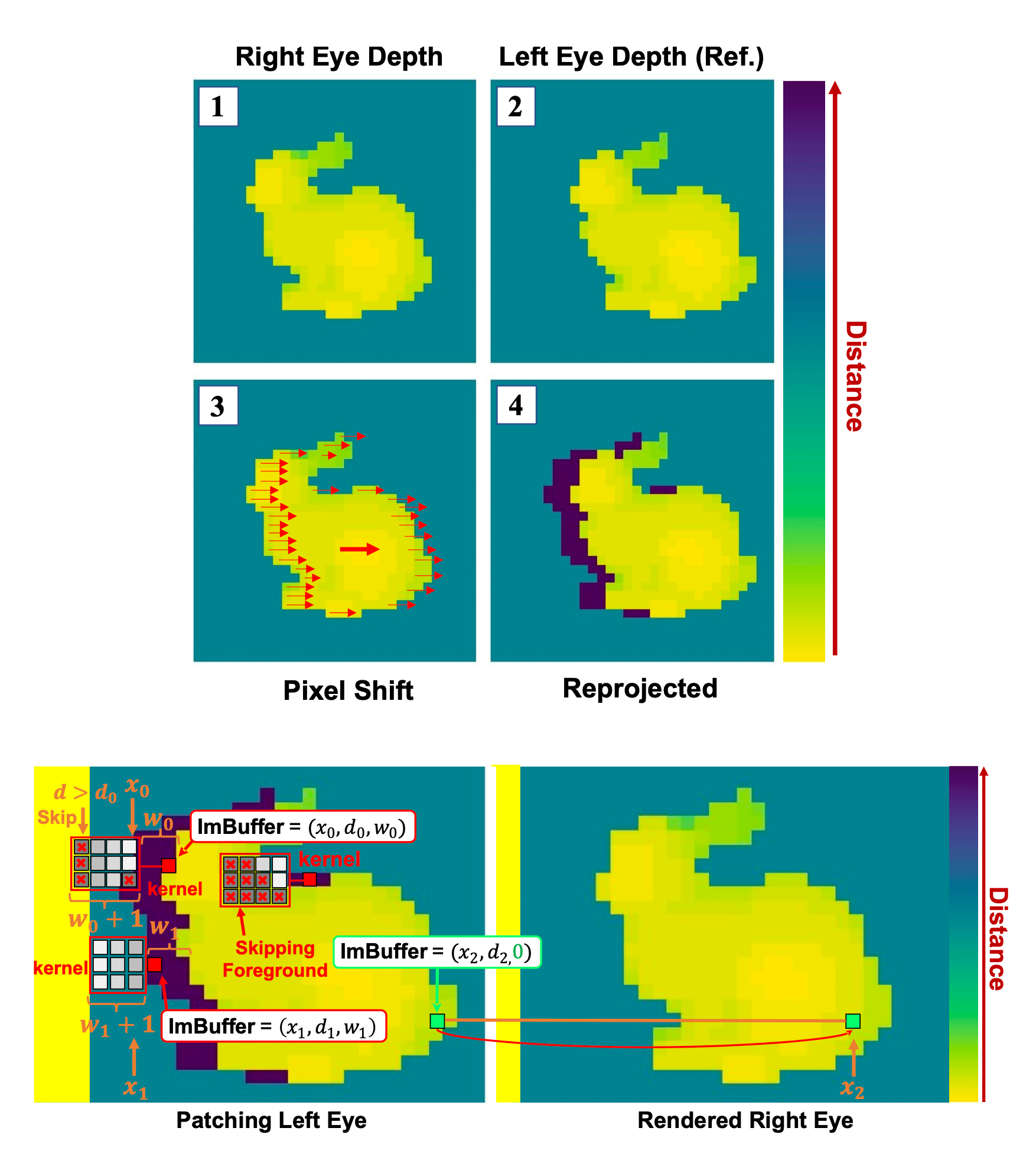

The Reprojection stage uses a compute shader to shift every pixel from the rendered eye into what would be the other eye’s screen space. It then marks which pixels are occluded, i.e. not visible from the rendered eye.
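As a rough illustration of the idea, here is a minimal Python sketch of forward reprojection along a single scanline. It is not the researchers’ compute shader: the function name, the `HOLE` marker, and the toy camera values are all hypothetical, and it assumes the left eye is the rendered one, the views differ only by a horizontal shift, and disparity in pixels is focal length times eye separation divided by depth.

```python
HOLE = None  # hypothetical marker for pixels the rendered eye could not see


def reproject_row(colors, depths, focal_px, ipd_m):
    """Forward-warp one scanline from the rendered (left) eye to the right eye.

    Assumes all depths are positive. Returns the warped colors and depths;
    entries still set to HOLE are the pixels a patching step would fill.
    """
    width = len(colors)
    out_color = [HOLE] * width
    out_depth = [float("inf")] * width
    for x in range(width):
        z = depths[x]
        # Nearer surfaces get a larger shift than distant ones.
        disparity = round(focal_px * ipd_m / z)
        xr = x - disparity
        # Depth test: when two source pixels land on the same target,
        # keep the one closer to the viewer.
        if 0 <= xr < width and z < out_depth[xr]:
            out_color[xr] = colors[x]
            out_depth[xr] = z
    return out_color, out_depth


# Toy scanline: a near object ("E", "F") in front of a far background.
colors = ["a", "b", "c", "d", "E", "F", "g", "h"]
depths = [2.0, 2.0, 2.0, 2.0, 1.0, 1.0, 2.0, 2.0]
warped, warped_depth = reproject_row(colors, depths, focal_px=4.0, ipd_m=0.5)
print(warped)  # ['b', 'c', 'E', 'F', None, 'g', 'h', None]
```

The gap next to the near object is background that the left eye never saw, and the gap at the right edge lies outside the left eye’s view. Both are left marked for the next stage.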

This is where the Patching stage comes in. It’s a lightweight image shader that applies a depth-aware in-paint filter to the occluded pixels marked by the Reprojection step, blurring background pixels into them.
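A depth-aware fill of this kind can be sketched in a few lines of Python. This is an assumption-laden stand-in, not the paper’s filter: `patch_row`, `radius`, and `depth_tol` are hypothetical names, colors are single grayscale values, and holes are represented as `None`. Each hole takes the average of nearby valid pixels that sit at roughly the farthest depth in its neighborhood, so foreground colors do not bleed into revealed background.

```python
def patch_row(colors, depths, radius=2, depth_tol=0.1):
    """Fill holes (None) with the mean of nearby background pixels."""
    width = len(colors)
    patched = list(colors)
    for x in range(width):
        if colors[x] is not None:
            continue  # only occluded pixels need patching
        lo, hi = max(0, x - radius), min(width, x + radius + 1)
        neighbours = [
            (depths[i], colors[i]) for i in range(lo, hi) if colors[i] is not None
        ]
        if not neighbours:
            continue  # nothing valid in range; leave the hole untouched
        farthest = max(z for z, _ in neighbours)
        # Keep only samples near the farthest depth, i.e. the background.
        background = [c for z, c in neighbours if farthest - z <= depth_tol]
        patched[x] = sum(background) / len(background)
    return patched


# Bright near object (0.9 at depth 1) over a darker background (depth 2).
colors = [0.2, 0.2, 0.9, 0.9, None, 0.4, 0.4, None]
depths = [2.0, 2.0, 1.0, 1.0, float("inf"), 2.0, 2.0, float("inf")]
patched = patch_row(colors, depths)
print(patched[4], patched[7])  # both holes take the background value 0.4
```

A plain blur would mix the bright object into the hole at index 4; restricting samples by depth is what keeps the fill looking like background.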

The researchers claim that YORO decreases GPU rendering overhead by over 50%, and that the synthetic eye view is “visually lossless” in most cases.

In a real-world test on a Quest 2 running Unity’s VR Beginner: The Escape Room sample, implementing YORO increased the frame rate by 32%, from 62FPS to 82FPS.
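For readers who want to check the arithmetic, the short Python snippet below converts the two reported frame rates into a percentage gain and a per-frame time saving. The helper names are ours; only the 62FPS and 82FPS figures come from the test described above.

```python
def percent_increase(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def frame_time_ms(fps):
    """Average time available per frame, in milliseconds."""
    return 1000.0 / fps


fps_before, fps_after = 62, 82
gain = percent_increase(fps_before, fps_after)
saved_ms = frame_time_ms(fps_before) - frame_time_ms(fps_after)
time_cut = saved_ms / frame_time_ms(fps_before) * 100.0

print(f"{gain:.1f}% more frames per second")  # 32.3%
print(f"{saved_ms:.2f} ms less per frame")    # 3.93 ms
print(f"{time_cut:.1f}% shorter frame time")  # 24.4%
```

Note that a 32% higher frame rate corresponds to roughly 24% less time per frame. That is a whole-frame measurement, so it is not directly comparable with the claimed reduction of over 50% in GPU rendering overhead.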

The exception to this “visually lossless” synthesis is in the extreme near-field, meaning virtual objects that are very close to your eyes. Stereo disparity naturally increases the closer an object is to your eyes, so at some point the YORO approach just breaks down.

In this case, the researchers suggest simply falling back to regular stereo rendering. This can even be done for specific objects, ones very close to your eyes, rather than the whole scene.
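To see why the near-field is the problem case, consider the standard pinhole stereo relation, in which disparity is inversely proportional to depth. The Python sketch below uses it to pick which objects would get regular stereo rendering. Everything numeric here is a made-up assumption for illustration (the focal length, eye separation, disparity threshold, and object distances), and the article does not describe the criterion the researchers actually use.

```python
def disparity_px(depth_m, focal_px=500.0, ipd_m=0.063):
    """Horizontal shift between the two eye views for a point at depth_m."""
    return focal_px * ipd_m / depth_m


def needs_true_stereo(depth_m, max_disparity_px=64.0):
    """Hypothetical rule: fall back when the shift exceeds a chosen limit."""
    return disparity_px(depth_m) > max_disparity_px


# Hypothetical scene: object name -> distance from the viewer in meters.
scene = {"wall": 3.0, "table": 1.0, "held_tool": 0.25}

for name, depth in scene.items():
    print(f"{name}: {disparity_px(depth):.1f} px")

fallback = [name for name, depth in scene.items() if needs_true_stereo(depth)]
print(fallback)  # ['held_tool']
```

Halving the distance doubles the shift, so the holes left behind by reprojection grow quickly for anything held close to the face, while the rest of the scene can still be synthesized.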

A more significant limitation of YORO is that it doesn’t support transparent geometry. However, the researchers note that transparency requires a second rendering pass on mobile GPUs anyway, so isn’t used, and suggest that YORO could be inserted before the transparent rendering pass.

The source code of a Unity implementation of YORO is available on GitHub, under the GNU General Public License (GPL). As such, it’s theoretically already possible for VR developers to implement this in their titles. But will platform-holders like Meta, ByteDance, and Apple adopt a technique similar to YORO at the SDK or system level? We’ll keep a close eye on these companies in coming months and years for any signs.