Snap OS now has in-app payments, a UI Kit, a permissions system for raw camera access, EyeConnect automatic colocation, and more.

What Are Snap OS, Snap Spectacles, And Snap Specs?

If you’re unfamiliar, the current Snap Spectacles are $99/month AR glasses for developers ($50/month if they’re students), intended to let them develop apps for the Specs consumer product that the company behind Snapchat intends to ship in 2026.

Spectacles have a 46° diagonal field of view, angular resolution comparable to Apple Vision Pro, relatively limited computing power, and a built-in battery life of just 45 minutes. They’re also the bulkiest AR device in “glasses” form factor we've seen yet, weighing 226 grams. That is nearly 5 times as heavy as Ray-Ban Meta glasses, for an admittedly entirely unfair comparison.

But Snap CEO Evan Spiegel claims that the consumer Specs will have “a much smaller form factor, at a fraction of the weight, with a ton more capability”, while running all the same apps developed so far.

As such, what’s arguably more important to keep track of here is Snap OS, not the developer kit hardware.

Snap OS is relatively unique. While on an underlying level it's Android-based, you can’t install APKs on it, and thus developers can't run native code or use third-party engines like Unity. Instead, they build sandboxed “Lenses”, the company’s name for apps, using the Lens Studio software for Windows and macOS.

In Lens Studio, developers use JavaScript or TypeScript to interact with high-level APIs, while the operating system itself handles the low-level core tech like rendering and core interactions. This has many of the same advantages as the Shared Space of Apple’s visionOS: near-instant app launches, interaction consistency, and easy implementation of shared multi-user experiences without friction. It even allows the Spectacles mobile app to be used as a spectator view for almost any Lens.

Snap OS doesn't support multitasking, but that is more likely a limitation of the current hardware than of the operating system itself.

Since releasing Snap OS in the latest Spectacles kit last year, Snap has repeatedly added new capabilities for developers building Lenses.

In March the company added the ability to use the GPS and compass heading to build experiences for outdoor navigation, detect when the user is holding a phone, and spawn a system-level floating keyboard for text entry.

Snap Spectacles See Peridots Play Together & Now Use GPS

Six months in, Snap’s AR Spectacles for developers and educators just got big new features, including GPS support and multiplayer Peridot.

In June, it added a suite of AI features, including AI speech to text for 40+ languages, the ability to generate 3D models on the fly, and advanced integrations with the visual multimodal capabilities of Google’s Gemini and OpenAI’s ChatGPT.

And last month, we went hands-on with Snap OS 2.0, which rather than just focusing on the developer experience, also rounds out Snap’s first-party software offering as a step towards next year’s consumer Specs launch. The new OS version adds and improves first-party apps like Browser, Gallery, and Spotlight, and adds a Travel Mode, to bring the AR platform closer to being ready for consumers.

At Lens Fest this week, Snap detailed the new developer capabilities of Snap OS 2.0, including the ability for developers to monetize their Lenses.

Commerce Kit

Snap has repeatedly confirmed that all Lenses built for the current Spectacles developer kit will run on its consumer Specs next year. But until now it hasn’t really told developers why they’d want to build Lenses in the first place.

Commerce Kit is an API and payment system for Snap OS devices. Users set up a payment method and 4-digit PIN in the Spectacles smartphone app, and developers can implement microtransactions in their Lenses. It's very similar to what you’d find on standalone VR headsets and traditional consoles.

Given that Lenses themselves can only be free, this is the first way for Lens developers to directly monetize their work.

Commerce Kit is currently in closed beta, which US-based developers can apply to join.

Permission Alerts

Snap OS Lenses can’t simultaneously access the internet and raw sensor data: camera frames, microphone audio, or GPS coordinates. This has been a fundamental part of Snap’s software design.

There are higher-level Snap APIs for computer vision, speech to text, and colocation, to be clear, but if developers want to run fully custom code on raw sensor data they lose access to the internet.

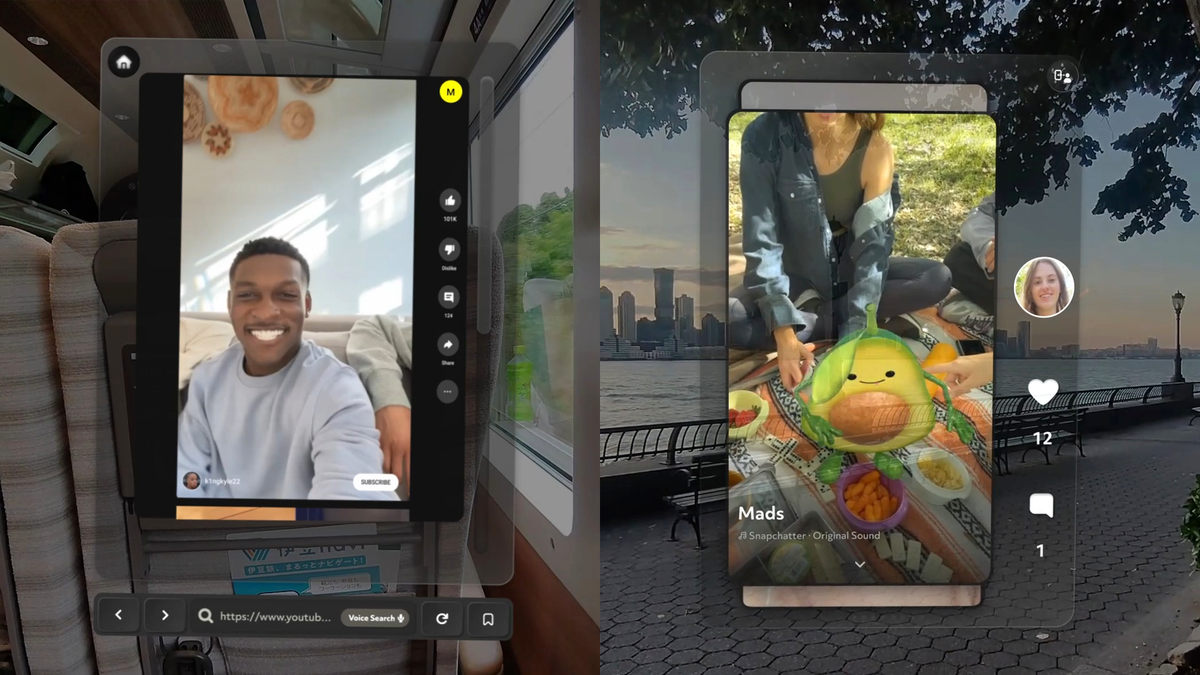

The prompt (left) and what bystanders see (right).

Now, Snap has added the ability for experimental Lenses to get both raw sensor access and internet access. The catch is that a permissions prompt will appear every time the Lens is launched, and the forward-facing LED on the glasses will pulse while the app is in use.

Snap says this Bystander Indicator is essential to let people nearby know that they might be being recorded.
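
To make the rule concrete, here is a minimal TypeScript sketch of the policy as described above. It is not Snap's API: the type names, field names, and the `decideLaunch` function are all hypothetical, and the behavior for Lenses that do not combine internet and raw sensor access is an assumption.

```typescript
// Hypothetical model of the access rule described in the article.
// None of these names come from Snap's SDK.
interface LensRequest {
  usesInternet: boolean;    // Lens wants network access
  usesRawSensors: boolean;  // Lens wants camera frames, mic audio, or GPS
  experimental: boolean;    // Lens is flagged as experimental
}

interface LaunchDecision {
  allowed: boolean;             // may the Lens run with the requested access?
  showPromptOnLaunch: boolean;  // permissions prompt on every launch
  pulseLed: boolean;            // Bystander Indicator LED while in use
}

function decideLaunch(req: LensRequest): LaunchDecision {
  const needsBoth = req.usesInternet && req.usesRawSensors;
  if (!needsBoth) {
    // Assumption: without the combination, no special prompt or LED applies.
    return { allowed: true, showPromptOnLaunch: false, pulseLed: false };
  }
  if (req.experimental) {
    // Both kinds of access are granted, at the cost of a prompt and the LED.
    return { allowed: true, showPromptOnLaunch: true, pulseLed: true };
  }
  // Non-experimental Lenses cannot combine the two.
  return { allowed: false, showPromptOnLaunch: false, pulseLed: false };
}
```

The point of the sketch is that the prompt and the pulsing LED are tied to the combination of internet and raw sensor access, not to either one alone.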

UI Kit

The Spectacles UI Kit (SUIK) lets developers easily add the same UI elements as the Snap OS system.

Mobile Kit

The new Spectacles Mobile Kit lets iOS and Android smartphone apps communicate with Spectacles Lenses via Bluetooth.

Snap OS already lets developers easily use your iPhone as a 6DoF tracked controller via the Spectacles phone app, but the new Mobile Kit allows developers to build custom companion phone apps, or add this as a feature to their existing smartphone app.

“Send data from mobile applications such as health tracking, navigation, and gaming apps, and create extended augmented reality experiences that are hands free and don’t require Wi-Fi.”

Semantic Hit Testing

Semantic Hit Testing is a new API that lets Snap OS Lenses cast a ray, for example from the user’s hand, and figure out whether it hits a valid ground surface.

This can be used for instant placement of virtual objects, and is a developer feature we've seen arrive on multiple XR platforms now.
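
For readers unfamiliar with hit testing, the underlying geometry can be sketched in a few lines of TypeScript. This is a conceptual illustration only, not the Snap OS Semantic Hit Testing API: the `Vec3` and `Ray` types and the `hitTestGround` function are hypothetical, and the sketch assumes the ground is a flat horizontal plane at a known height, whereas a real system has to work out which surfaces count as ground.

```typescript
// Conceptual sketch only: NOT the Snap OS Semantic Hit Testing API.
// Assumes the ground is a flat horizontal plane at height groundY.
type Vec3 = { x: number; y: number; z: number };

interface Ray {
  origin: Vec3;     // e.g. the position of the user's hand
  direction: Vec3;  // pointing direction (need not be normalized)
}

// Returns the point where the ray meets the ground plane, or null if the
// ray is parallel to the ground, points away from it, or the hit lies
// farther away than maxDistance.
function hitTestGround(ray: Ray, groundY: number, maxDistance: number): Vec3 | null {
  const { origin, direction } = ray;
  const len = Math.hypot(direction.x, direction.y, direction.z);
  if (len === 0) return null; // degenerate ray

  // Normalize so that t below is a distance along the ray.
  const d = { x: direction.x / len, y: direction.y / len, z: direction.z / len };
  if (Math.abs(d.y) < 1e-6) return null; // parallel to the ground plane

  const t = (groundY - origin.y) / d.y;
  if (t < 0 || t > maxDistance) return null; // behind the origin or too far

  return { x: origin.x + d.x * t, y: groundY, z: origin.z + d.z * t };
}

// Usage: a hand 1 unit above the ground, pointing forward and down at 45°.
const placement = hitTestGround(
  { origin: { x: 0, y: 1, z: 0 }, direction: { x: 0, y: -1, z: -1 } },
  0,
  5
);
```

A non-null result is where a virtual object could be placed; a null result means there is no valid placement along that ray.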

Snap Cloud

Snap Cloud is a back-end-as-a-service platform for Snap OS developers, delivered via a partnership with Supabase.

The service provides authentication via Snap identity, databases, serverless functions, real-time data communication and synchronization, and CDN object storage.

Snap Cloud is currently an Alpha release, and developers must apply to be approved on a case-by-case basis.

EyeConnect

Snap OS already has the best system-level colocation support we've seen yet, letting you easily join a local multiplayer session with very little friction. When someone nearby is in a Lens that supports multiplayer, you'll see their session listed in the main OS menu to join, and after a short calibration step you are colocated.

With the new EyeConnect feature, Snap seems to be pushing even harder on the idea of low-friction colocated multiplayer.

According to Snap, EyeConnect directs Spectacles wearers to simply look at each other, and uses device and face tracking to automatically co-locate all users in a session without any mapping step.

For Lenses that place virtual elements at real world coordinates, a Custom Locations system is used that doesn't support EyeConnect.