Google has launched its second Developer Preview of the Android XR software development kit (SDK) following its initial release late last year, introducing new features and improvements including wider support for immersive video, better adaptive UI layouts, hand-tracking in ARCore for Jetpack XR, and more.

Announced at Google I/O, the slate of updates to the Android XR SDK is aimed at giving developers more standardized tools to either make their own XR-native apps or bring their standard Android apps to headsets.

This now includes support for 180° and 360° stereoscopic video playback using the MV-HEVC format, which is one of the most popular formats optimized for high-quality 3D immersive video.

Google announced that Android XR Developer Preview 2 also now has Jetpack Compose for XR, which enables adaptive UI layouts across XR displays using tools like SubspaceModifier and SpatialExternalSurface. Jetpack Compose is Google’s declarative UI toolkit, which aims to standardize UI design across mobile, tablet, and immersive headsets.

A major update in ARCore for Jetpack XR is the introduction of hand-tracking, which includes 26 posed joints for gesture-based interactions. Developers can now find updated samples, benchmarks, and guides to help integrate hand-tracking into apps.

Material Design for XR has also been expanded, which Google says will help “large-screen enabled apps [to] effortlessly adapt to the new world of XR.”

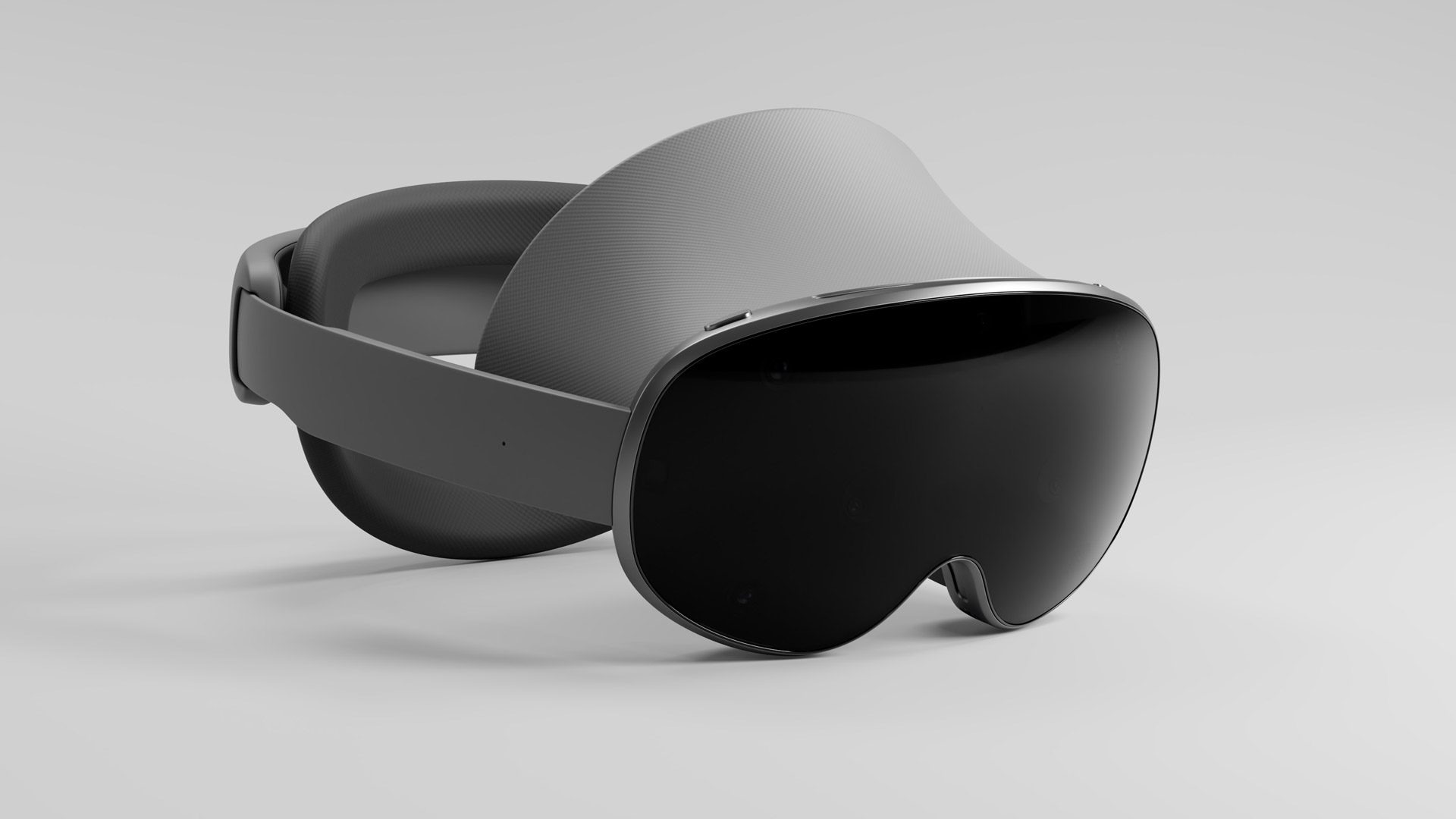

Still, a majority of Android XR developers likely don’t have access to official Android XR headsets, which will include mixed reality headset Samsung Project Moohan and AR glasses XREAL Project Aura when they launch later this year, making the Android XR Emulator an indispensable tool.

Google says Developer Preview 2 improves the Android XR Emulator with AMD GPU support, better stability, and tighter integration in Android Studio, which should help with XR app testing and development workflows.

Unity, the most popular game engine for XR development, also now offers access to Pre-Release version 2 of the Unity OpenXR: Android XR package, which brings significant performance bumps with Dynamic Refresh Rate and SpaceWarp support through Shader Graph. Unity’s improved Mixed Reality template also now features realistic hand mesh occlusion and persistent anchors.

Additionally, Android XR Samples for Unity have been released, showcasing features like hand tracking, plane tracking, face tracking, and passthrough to give developers a head start on integrating these features in their own Android XR apps.

That said, while Android XR wasn’t exactly a massive headliner at this year’s Google I/O, the company is moving forward by not only releasing Android XR to more partner devices, but also by releasing Android XR smart glasses from eyewear makers Warby Parker and Gentle Monster in the future.

Google says it’s releasing two main versions of its Android XR glasses: one strikingly similar in function to Ray-Ban Meta Glasses, and one with on-board displays for basic tasks like reading text, viewing photos and videos, and navigation.

You can learn more about Android XR Developer Preview 2 here, which includes more detail on all currently available tools and updates.