Meta’s web browser now uses depth sensing for WebXR Hit Testing on Quest 3 and Quest 3S, enabling instant mixed reality object placement without a Scene Mesh.

The WebXR Hit Testing API lets developers cast a conceptual ray from an origin, such as the user’s head or controller, and find where it first intersects real-world geometry. The API is part of the WebXR open standard, but how it works at the underlying technical level varies between devices.
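For developers who haven’t used the API, here is a minimal TypeScript sketch of the flow described above: create a hit test source whose ray starts at the viewer, then read the nearest intersection each frame. The `requestReferenceSpace`, `requestHitTestSource`, `getHitTestResults` and `getPose` calls come from the WebXR specifications; the `*Like` interfaces and the function names are illustrative assumptions, introduced only so the sketch type-checks without WebXR typings.

```typescript
// Minimal structural types (assumptions for this sketch) that mirror the parts of
// XRSession, XRFrame, XRHitTestResult and XRPose used below.
interface Vec3 { x: number; y: number; z: number; }
interface PoseLike { transform: { position: Vec3 }; }
interface HitTestResultLike { getPose(baseSpace: unknown): PoseLike | null | undefined; }
interface FrameLike { getHitTestResults(source: unknown): HitTestResultLike[]; }
interface SessionLike {
  requestReferenceSpace(type: string): Promise<unknown>;
  requestHitTestSource(options: { space: unknown }): Promise<unknown>;
}

// Creates a hit test source whose ray originates at the viewer (the user's head).
// The session is assumed to have been requested with the "hit-test" feature.
async function createViewerHitTestSource(session: SessionLike): Promise<unknown> {
  const viewerSpace = await session.requestReferenceSpace("viewer");
  return session.requestHitTestSource({ space: viewerSpace });
}

// Returns the position of the nearest intersection for this frame, or null on a miss.
// Hit test results are ordered by distance along the ray, nearest first.
function firstHitPosition(frame: FrameLike, source: unknown, refSpace: unknown): Vec3 | null {
  const nearest = frame.getHitTestResults(source)[0];
  if (!nearest) return null;
  const pose = nearest.getPose(refSpace);
  if (!pose) return null;
  const { x, y, z } = pose.transform.position;
  return { x, y, z };
}
```

In a real page, `firstHitPosition` would be called from the `requestAnimationFrame` callback of an immersive session, with a reference space such as `local` passed as `refSpace`.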

Previously on Quest 3 and Quest 3S, WebXR hit testing used the Scene Mesh generated by the mixed reality setup process to determine which real-world geometry the raycast hits. But that approach had several problems. If the user hadn’t set up a mesh for the room they’re in, they’d need to do so when the developer called the hit testing API, adding significant friction. And even if they had a Scene Mesh, it wouldn’t reflect moved furniture or other changes since the scan.

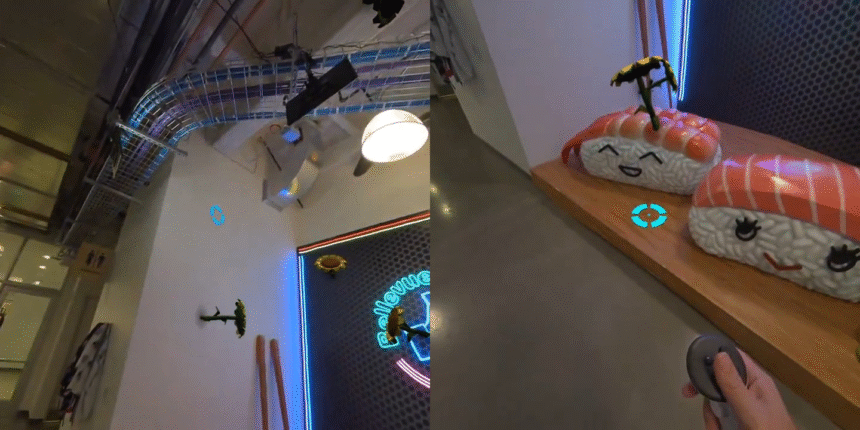

Demo clip from Meta engineer Rik Cabanier.

With Horizon Browser 40.4, rolling out now, the WebXR Hit Testing API uses Meta’s Depth API under the hood instead of the Scene Mesh.

Supported on Quest 3 and Quest 3S, the Depth API provides real-time first-person depth frames, generated by a computer vision algorithm that compares the disparity between the two tracking cameras on the front. It works up to a distance of around 5 meters, and is typically used to implement dynamic occlusion in mixed reality, since you can determine whether virtual objects should be occluded by physical geometry.
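The occlusion decision itself comes down to a per-pixel depth comparison. The TypeScript sketch below illustrates that idea only and is not Meta’s implementation: the function name, the `bias` tolerance and the choice to ignore out-of-range samples are assumptions, and the 5 meter cutoff simply reuses the approximate range quoted above.

```typescript
// Assumed maximum usable depth, taken from the "around 5 meters" figure in the article.
const MAX_DEPTH_METERS = 5;

// Decides whether a virtual fragment should be hidden behind real geometry.
// realDepth:    sensed distance to the physical surface along the view ray, in meters.
// virtualDepth: distance from the viewer to the virtual fragment along the same ray, in meters.
// bias:         small tolerance (hypothetical default) to reduce flicker where the two nearly coincide.
function isOccluded(realDepth: number, virtualDepth: number, bias = 0.02): boolean {
  // Treat invalid or out-of-range samples as "no known real surface" and do not occlude.
  if (!Number.isFinite(realDepth) || realDepth <= 0 || realDepth > MAX_DEPTH_METERS) {
    return false;
  }
  // The virtual fragment is hidden only if it lies behind the real surface.
  return virtualDepth > realDepth + bias;
}
```

In practice this comparison usually runs in a fragment shader against a depth texture rather than on the CPU; the function form here just makes the logic easy to read.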

Meta SDKs Get Instant Placement, Keyboard Cutout, Colocation Discovery

Meta’s Quest SDKs now enable placing virtual objects on surfaces without a scene scan, showing a passthrough cutout of any keyboard, and discovering nearby headsets for colocation via Bluetooth.

For hit testing, the Depth API allows instantly placing virtual objects on real-world surfaces without the need for a Scene Mesh. This capability has been easily available to Unity developers for around a year as part of Meta’s Mixed Reality Utility Kit (MRUK), and Unreal and native developers can implement it themselves using the Depth API. Now, it’s easily available to WebXR developers too.
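On the web, instant placement typically follows a simple pattern: keep a reticle at the latest hit position, and spawn an object there when the user selects. The TypeScript sketch below shows one way to structure that. The `InstantPlacer` class and `Point3` type are hypothetical names for illustration and belong to no SDK; the hit position is assumed to come from the WebXR Hit Testing API each frame.

```typescript
// Hypothetical position type for this sketch, in meters.
interface Point3 { x: number; y: number; z: number; }

// Tracks the latest hit test position and spawns stationary objects at it on demand.
class InstantPlacer {
  private reticle: Point3 | null = null;
  readonly placed: Point3[] = [];

  // Call once per frame with the current hit position, or null when the ray misses.
  updateReticle(hit: Point3 | null): void {
    this.reticle = hit ? { ...hit } : null;
  }

  // Call from the session's "select" event handler.
  // Returns false when there is no surface under the reticle, so nothing is placed.
  placeObject(): boolean {
    if (!this.reticle) return false;
    // Copy the position so later reticle updates do not move the placed object.
    this.placed.push({ ...this.reticle });
    return true;
  }
}
```

A page might create one `InstantPlacer`, call `updateReticle` from its frame loop, and call `placeObject` when the controller trigger or a hand pinch fires `select`.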

Keep in mind, however, that this is only suitable for spawning simple stationary objects and interfaces. If entities need to move around surfaces or interact with the rest of the room, a scanned Scene Mesh will still be needed.