The latest version of Meta’s SDK for Quest adds thumb microgestures, letting you swipe and tap on your index finger, and improves the quality of Audio To Expression.

The update also includes helper utilities for passthrough camera access, the feature Meta just released to developers. If the user grants permission, it gives apps access to the headset’s forward-facing color cameras, along with metadata such as the lens intrinsics and headset pose, which they can leverage to run custom computer vision models.

Quest Passthrough Camera API Out Now For Developers To Play With

Quest’s highly anticipated Passthrough Camera API is now available for all developers to experiment with, though they can’t yet include it in store app builds.

Thumb Microgestures

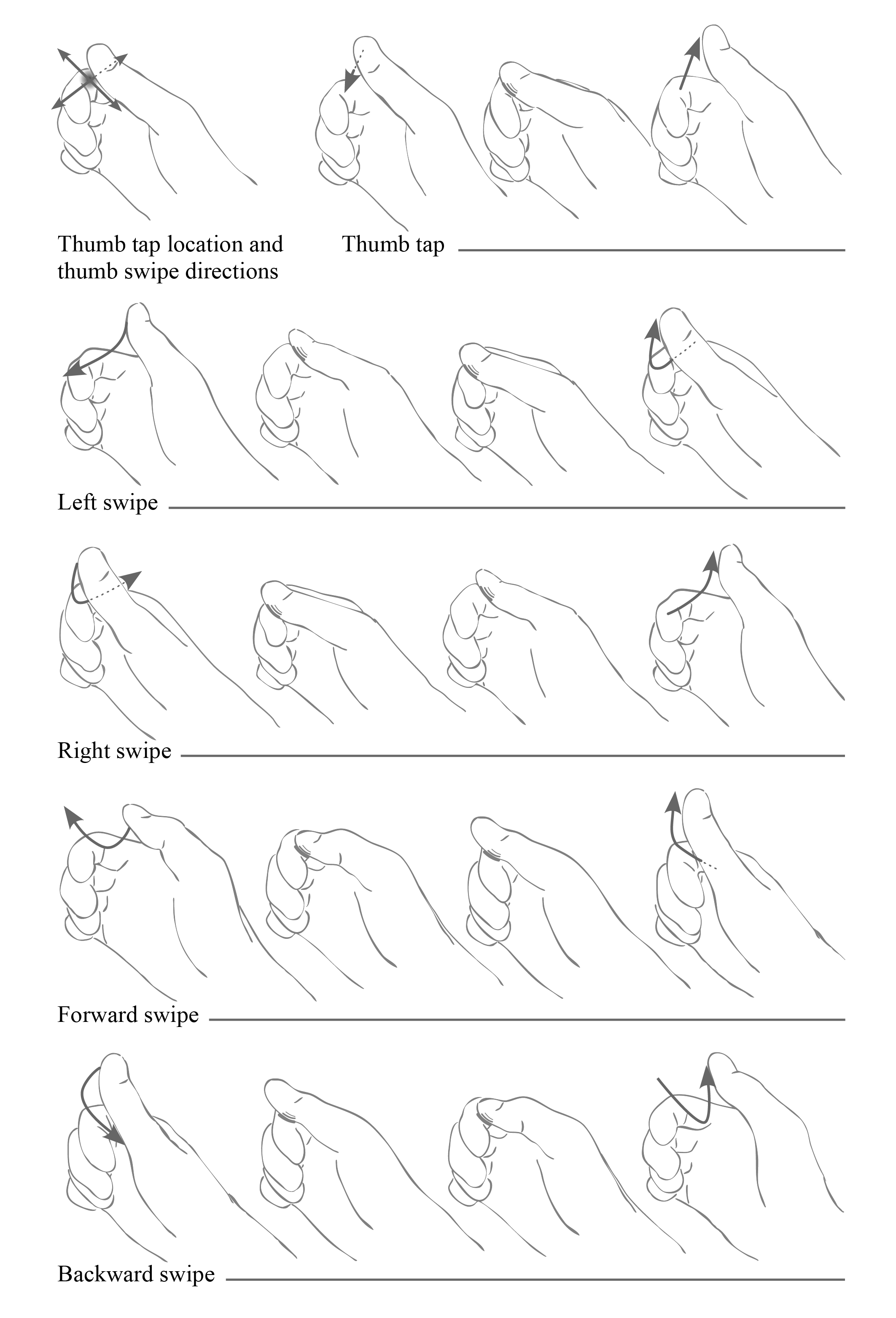

Microgestures leverage the controller-free hand tracking of Quest headsets to detect your thumb tapping and swiping on your index finger, as if it were a Steam Controller D-pad, when your hand is sideways and your fingers are curled.

For Unity, microgestures require the Meta XR Core SDK. For other engines, they're available via the OpenXR extension XR_META_hand_tracking_microgestures.

Meta says these microgestures could be used to implement teleportation with snap turning without the need for controllers, though how microgestures are actually used is up to developers.

Developers could, for example, use them as a less straining way to navigate an interface, without the need to reach out or point at elements.
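As a rough illustration of that interface idea, here is a minimal, engine-agnostic Python sketch that treats swipes as D-pad moves across a grid menu and a thumb tap as a selection. The `Microgesture` names and the `GridMenu` class are hypothetical, made up for this example; they are not identifiers from the Meta XR Core SDK or the OpenXR extension, and a real app would feed in whatever events its engine reports.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Microgesture(Enum):
    # Hypothetical event names for this sketch; not the SDK's identifiers.
    SWIPE_LEFT = auto()
    SWIPE_RIGHT = auto()
    SWIPE_FORWARD = auto()
    SWIPE_BACKWARD = auto()
    THUMB_TAP = auto()


# Focus movement as (row delta, column delta) for each swipe direction.
_MOVES = {
    Microgesture.SWIPE_LEFT: (0, -1),
    Microgesture.SWIPE_RIGHT: (0, 1),
    Microgesture.SWIPE_FORWARD: (-1, 0),
    Microgesture.SWIPE_BACKWARD: (1, 0),
}


@dataclass
class GridMenu:
    rows: int
    cols: int
    row: int = 0
    col: int = 0

    def handle(self, gesture):
        """Move focus on a swipe; return the focused cell on a tap, else None."""
        if gesture is Microgesture.THUMB_TAP:
            return (self.row, self.col)
        d_row, d_col = _MOVES[gesture]
        # Clamp so focus stops at the edge of the grid instead of wrapping.
        self.row = min(max(self.row + d_row, 0), self.rows - 1)
        self.col = min(max(self.col + d_col, 0), self.cols - 1)
        return None


# Two swipes right and one swipe backward (down), then a tap to select.
menu = GridMenu(rows=2, cols=3)
for gesture in (
    Microgesture.SWIPE_RIGHT,
    Microgesture.SWIPE_RIGHT,
    Microgesture.SWIPE_BACKWARD,
):
    menu.handle(gesture)
selected = menu.handle(Microgesture.THUMB_TAP)
```

Because focus moves in discrete steps, the user never has to aim at an element; the hand can stay resting at their side.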

Interestingly, Meta also uses this thumb microgesture approach for its in-development sEMG neural wristband, the input device for its eventual AR glasses, and reportedly may also use it for the HUD glasses it plans to ship later this year.

This suggests that Quest headsets could be used as a development platform for these future glasses, leveraging thumb microgestures, though eye tracking would be required to match the full input capabilities of the Orion prototype.

Meta Plans To Launch “Half A Dozen More” Wearable Devices

In a leaked memo, Meta’s CTO referenced the company’s plan to launch “half a dozen more AI powered wearables”.

Improved Audio To Expression

Audio To Expression is an on-device AI model, introduced in v71 of the SDK, that generates plausible facial muscle movements from only microphone audio input, providing estimated facial expressions without any face tracking hardware.

Audio To Expression replaced the ten-year-old Oculus Lipsync SDK. That SDK only supported lips, not other facial muscles, and Meta claims Audio To Expression actually uses less CPU than Oculus Lipsync did.

Watch Meta’s Audio To Expression Quest Feature In Action

Here’s Meta’s new ‘Audio To Expression’ Quest SDK feature in action, compared to the old Oculus Lipsync.

Now, the company says v74 of the Meta XR Core SDK brings an upgraded model that “improves all aspects including emotional expressivity, mouth movement, and the accuracy of Non-Speech Vocalizations compared to earlier models”.

Meta’s own Avatars SDK still doesn’t use Audio To Expression, though, nor does it yet use inside-out body tracking.