Could AI “world models” be the most viable path to fully photorealistic interactive VR?

Much of the “virtual reality” seen in science fiction involves putting on a headset, or connecting a neural interface, to enter an interactive virtual world that looks and behaves as if fully real. In contrast, while today’s high-end VR can have relatively realistic graphics, it’s still very clearly not real, and these virtual worlds take years of development and hundreds of thousands or millions of dollars to build. Worse, mainstream standalone VR has graphics that in the best cases look like an early PS4 game, and on average look closer to late-stage PS2.

With each new Quest headset generation, Meta and Qualcomm have delivered a doubling of GPU performance. While impressive, this path will take decades to reach even the performance of today’s PC graphics cards, never mind getting anywhere near photorealism, and much of the future gains will be spent on increasing resolution. Techniques like eye-tracked foveated rendering and neural upscaling will help, but can only go so far.
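
As a rough sanity check on that timescale, the sketch below counts how many per-generation doublings it takes to close a given performance gap. The gap ratio and release cadence in the usage example are purely hypothetical placeholders, not measured figures from Meta, Qualcomm, or any benchmark.

```python
import math


def generations_to_close_gap(gap_ratio: float, gain_per_generation: float = 2.0) -> int:
    """Whole hardware generations needed to multiply performance by gap_ratio."""
    if gap_ratio <= 1:
        return 0  # already at or above the target
    # log base `gain_per_generation` of the gap, rounded up to whole generations
    return math.ceil(math.log2(gap_ratio) / math.log2(gain_per_generation))


def years_to_close_gap(gap_ratio: float, years_per_generation: float,
                       gain_per_generation: float = 2.0) -> float:
    """Convert the generation count into years for an assumed release cadence."""
    return generations_to_close_gap(gap_ratio, gain_per_generation) * years_per_generation


if __name__ == "__main__":
    # Hypothetical inputs: a 100x gap and one new headset every 3 years.
    print(generations_to_close_gap(100))   # doublings needed
    print(years_to_close_gap(100, 3))      # years at that cadence
```

Because each generation only doubles performance, the number of generations grows with the logarithm of the gap, so even a large change in the assumed gap shifts the estimate by only a few generations.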

Gaussian splatting does allow for photorealistic graphics on standalone VR, but splats represent only a moment in time, and must be captured from the real world or pre-rendered as 3D environments in the first place. Adding real-time interactivity requires a hybrid approach that incorporates traditional rendering.

But there may be an entirely different path to photorealistic interactive virtual worlds. One far stranger, and fraught with its own issues, yet potentially far more promising.

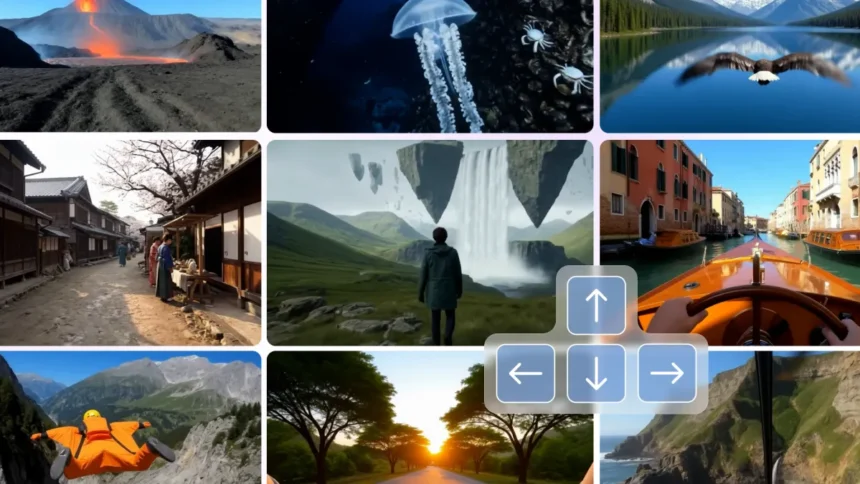

Yesterday, Google DeepMind revealed Genie 3, an AI model that generates a real-time interactive video stream from a text prompt. It’s essentially a near-photorealistic video game, but one where every frame is entirely AI-generated, with no traditional rendering or image input involved at all.

Google calls Genie 3 a “world model”, but it could also be described as an interactive video model. The initial input is a text prompt, the real-time input is mouse and keyboard, and the output is a video stream.

What’s remarkable about the Genie series, as with many other generative AI systems, is the staggering pace of progress.

Revealed in early 2024, the original Genie was primarily focused on generating 2D side-scrollers at 256×256 resolution, and could only run for a few dozen frames before the world would glitch and smear into an inconsistent mess, which is why the sample clips shown were only a second or two long.

Genie 1, from February 2024.

Then, in December, Genie 2 stunned the AI industry by achieving a world model for 3D graphics, with first-person or third-person control via standard mouse-look and WASD or arrow key controls. It output at 360p 15fps and could run for around 10-20 seconds, after which the world began to lose coherence.

Genie 2’s output was also blurry and low-detail, with the distinctly AI-generated look you might recognize from the older video generation models of a few years ago.

Genie 2 from December (left) vs Genie 3

Genie 3 is a significant leap forward. It outputs highly realistic graphics at 720p 24fps, with environments remaining fully consistent for 1 minute, and “largely” consistent for “several minutes”.
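
To put the jump from Genie 2 to Genie 3 in numbers, the short sketch below compares raw pixel throughput. It assumes 16:9 frames (640×360 for 360p and 1280×720 for 720p), which the announcements quoted here do not spell out, and it says nothing about visual quality or consistency.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixels generated per second at a given resolution and frame rate."""
    return width * height * fps


# Assumption: both models output 16:9 frames.
genie2_rate = pixels_per_second(640, 360, 15)    # 360p at 15fps
genie3_rate = pixels_per_second(1280, 720, 24)   # 720p at 24fps

if __name__ == "__main__":
    print(genie2_rate)                 # 3456000
    print(genie3_rate)                 # 22118400
    print(genie3_rate / genie2_rate)   # 6.4
```

Under that assumption, Genie 3 produces 6.4 times as many pixels per second as Genie 2, on top of holding the world together several times longer.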

If you’re still not quite sure what Genie 3 does in practice, let me spell it out clearly: you type a description of the virtual world you want, and within a few seconds it appears on screen, navigable through standard keyboard and mouse movement controls.

And these virtual worlds are not static. Doors will open when you approach them, moving objects will cast dynamic shadows, and you can even see physics interactions like splashes and ripples in water as objects disturb it.

In this demo, you can see the character’s boots disturbing puddles on the ground.

Arguably the most fascinating aspect of Genie 3 is that these behaviors are emergent from the underlying AI model developed during training, not preprogrammed. While human developers often spend months implementing simulations of just one aspect of physics, Genie 3 simply has this knowledge baked in. This is why Google calls it a “world model”.

More involved interactivity can be achieved by specifying the interactions in the prompt.

In one example clip, the prompt “POV action camera of a tan house being painted by a first person agent with a paint roller” was entered to essentially generate a photorealistic wall painting mini-game.

Prompt: “POV action camera of a tan house being painted by a first person agent with a paint roller”

Genie 3 also adds support for “promptable world events”, from changing the weather to adding new objects and characters.

These event prompts could come from the player, for example through voice input, or be pre-scheduled by a world creator.

This could one day allow for a near-infinite variety of new content and events in virtual worlds, in contrast to the weeks or months it takes a traditional development team to ship updates.

Genie 3’s “promptable world events” in action.

Of course, 720p 24fps is far below what modern gamers expect, and gameplay sessions last a lot longer than a minute or two. Given the pace of progress, though, these basic technical limitations will likely fade away in coming years.

When it comes to adapting a model like Genie 3 for VR, other more mundane issues emerge.

The model would at minimum need to take a 6DoF head pose as input, as well as directional movement, and ideally would need to incorporate your hands and even body pose, unless you just want to walk around the world without directly interacting with any object.

None of that is impossible in theory, but it would likely require a much wider training dataset and significant architectural changes to the model.
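
To make those input requirements concrete, here is a minimal sketch of what a per-frame VR control input could look like as a data structure. Every name here (`Pose6DoF`, `VRFrameInput`, `can_interact`) is hypothetical and chosen for illustration; Google has not described any such interface for Genie 3.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]          # position in meters (x, y, z)
Quat = Tuple[float, float, float, float]   # orientation quaternion (x, y, z, w)


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical 6DoF pose: 3 degrees of translation plus 3 of rotation."""
    position: Vec3
    orientation: Quat


@dataclass(frozen=True)
class VRFrameInput:
    """Hypothetical per-frame input a VR-capable world model would consume."""
    head: Pose6DoF                          # required: drives the rendered viewpoint
    move_direction: Tuple[float, float]     # thumbstick-style directional movement
    left_hand: Optional[Pose6DoF] = None    # optional: needed for direct interaction
    right_hand: Optional[Pose6DoF] = None

    def can_interact(self) -> bool:
        """Without at least one tracked hand, the user can only walk and look."""
        return self.left_hand is not None or self.right_hand is not None


if __name__ == "__main__":
    identity: Quat = (0.0, 0.0, 0.0, 1.0)
    head = Pose6DoF((0.0, 1.7, 0.0), identity)
    walking_only = VRFrameInput(head=head, move_direction=(0.0, 1.0))
    print(walking_only.can_interact())  # False
```

Compared with the keyboard and mouse input Genie 3 takes today, this is a far larger and continuous input space, which is one way to see why broader training data and architectural changes would be needed.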

It would also of course need to output stereoscopic imagery. But the other eye could be synthesized, either with AI view synthesis or traditional techniques like YORO.

Latency may also be a concern, but Google claims Genie 3 has an end-to-end control latency of 50 milliseconds, which is surprisingly close to the 41.67 ms theoretical minimum for a 24 fps flatscreen game. If a future model can run at 90 fps, combined with VR reprojection this shouldn’t be an issue.
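
The latency figures above follow from simple frame-time arithmetic, sketched below. The 50 ms value is Google’s claimed end-to-end figure; treating one full frame interval as the floor is the simplifying assumption behind the 41.67 ms number.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of a single frame in milliseconds."""
    return 1000.0 / fps


CLAIMED_LATENCY_MS = 50.0  # Google's stated end-to-end control latency for Genie 3

frame_24 = frame_time_ms(24)                 # one frame at 24 fps
frame_90 = frame_time_ms(90)                 # one frame at 90 fps
overhead = CLAIMED_LATENCY_MS - frame_24     # latency beyond a single 24 fps frame

if __name__ == "__main__":
    print(round(frame_24, 2))   # 41.67
    print(round(frame_90, 2))   # 11.11
    print(round(overhead, 2))   # 8.33
```

In other words, the claimed latency is only about 8 ms more than a single frame at 24 fps, and the per-frame floor would drop to roughly 11 ms at 90 fps.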

Google also notes several limitations: Genie 3’s action space is limited, it cannot model complex interactions between multiple independent agents, it cannot simulate real-world locations with perfect geographic accuracy, and clear and legible text is often only generated when provided in the text prompt. It describes these issues as “ongoing research challenges”.

However, there’s another far more fundamental issue with AI “world models” like Genie 3 that will limit their scope, and it’s why traditional rendering isn’t going away any time soon.

That issue is called steerability: how closely the output matches the details of your text prompt.

You’ve likely seen impressive examples of highly realistic AI image generation in recent years, and of AI video generation (such as Google DeepMind’s Veo 3) in recent months. But if you haven’t used them yourself, you may not realize that while these models will follow your instructions in a general sense, they’ll often fail to match details you specify.

Further, if their output includes something you don’t want, even adjusting the prompt to remove it will often fail. For example, I recently asked Veo 3 to generate a video involving someone holding a hotdog with only ketchup, no mustard. But no matter how sternly I stressed that detail, the model wouldn’t generate a hotdog without mustard.

In traditionally rendered video games, you see exactly what the developers intended you to see. The minute details of the art direction and style create a unique feel to the virtual world, often painstakingly achieved through years of refinement.

In contrast, the output of AI models comes from a latent space shaped by patterns in the training data. The text prompt is closer to a hyperdimensional coordinate than a truly understood command, and thus will never match exactly what an artist had in mind. This becomes even trickier when promptable world events get involved.

Of course, the steerability of AI world models will improve over time too. But it’s a far harder challenge than just boosting the resolution and memory horizon, and may never allow the kind of precise control of a traditional game engine.

Prompt: “A classroom where on the blackboard at the front of the room it says GENIE-3 MEMORY TEST and underneath is a beautiful chalk picture of an apple, a mug of coffee, and a tree. The classroom is empty apart from this. Outside the window are trees and a few cars driving past.”

Still, even with the steerability issue, it would be foolish not to see the appeal of eventual photorealistic interactive VR worlds that you can conjure into existence by simply speaking or typing a description. AI world models seem uniquely well positioned to deliver on the promise of Star Trek’s Holodeck, more so than even using AI to generate assets for traditional rendering.

To be clear, we’re still in the early days of AI “world models”. There are a number of major challenges to solve, and it will likely be many years until a VR-capable one that can run for hours arrives on your headset. But the pace of progress here is stunning, and the potential is tantalizing. This is an area of research we’ll be paying very close attention to.