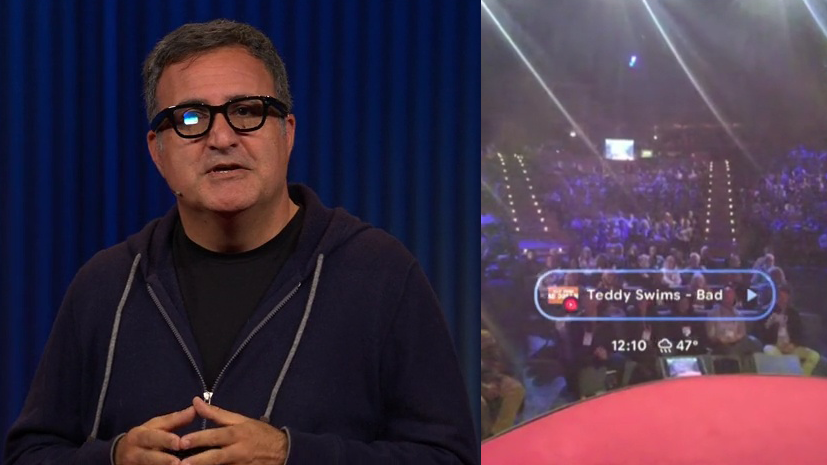

At TED2025 Google showed off sleek smart glasses with a HUD, though the company described them as “conceptual hardware”.

Shahram Izadi, Google’s Android XR lead, took to the TED stage earlier this month to show off both the HUD glasses and Samsung’s upcoming XR headset, and the 15-minute talk is now publicly available to watch.

A supercut of the TED2025 demo.

The glasses feature a camera, microphones, and speakers, like the Ray-Ban Meta glasses, but also have a “tiny high resolution in-lens display that’s full color”. The display appears to be monocular, refracting light in the right lens when viewed from certain camera angles during the demo, and has a relatively small field of view.

The demo focuses on Google’s Gemini conversational AI system, including the Project Astra capability, which lets it remember what it sees by “continuously encoding video frames, combining the video and speech input into a timeline of events, and caching this information for efficient recall”.

Here’s everything Izadi and his colleague Nishtha Bhatia demonstrate in the demo:

- Basic Multimodal: while looking at the audience, Bhatia asks Gemini to write a haiku based on what she’s seeing, and it responds “Faces all aglow. Eager minds await the words. Sparks of thought ignite.”

- Rolling Contextual Memory: after looking away from a shelf holding several objects, including a book, Bhatia asks Gemini for the title of “the white book that was on the shelf behind me”, and it answers correctly. She then tries a harder question, asking simply where her “hotel keycard” is, without giving the clue about the shelf. Gemini correctly answers that it’s to the right of the music record.

- Complex Multimodal: holding open a book, Bhatia asks Gemini what a diagram means, and it answers correctly.

- Translation: Bhatia looks at a Spanish sign and, without telling Gemini what language it’s in, asks Gemini to translate it to English. It succeeds. To prove that the demo is live, Izadi then asks the audience to pick another language; someone picks Farsi, and Gemini successfully translates the sign to Farsi too.

- Multi-Language Support: Bhatia speaks to Gemini in Hindi, without needing to change any language “mode” or “setting”, and it responds instantly in Hindi.

- Taking Action (Music): as an example of how Gemini on the glasses can trigger actions on your phone, Bhatia looks at a physical album she’s holding and tells Gemini to play a track from it. It starts the song on her phone, streaming it to the glasses via Bluetooth.

- Navigation: Bhatia asks Gemini to “navigate me to a park nearby with views of the ocean”. When she’s looking straight ahead, she sees a 2D turn-by-turn instruction, while when looking down she sees a 3D (though fixed) minimap showing the route.

This isn’t the first time Google has shown off smart glasses with a HUD, and it’s not even the first time such a demo has focused on Gemini’s Project Astra capabilities. At Google I/O 2024, almost one year ago, the company showed a short prerecorded demo of the technology.

Last year’s glasses were notably bulkier than what was shown at TED2025, however, suggesting the company is actively working on miniaturization with the goal of delivering a product.

However, Izadi still describes what Google is showing as “conceptual hardware”, and the company hasn’t announced any specific product, nor a product timeline.

In October, The Information’s Sylvia Varnham O’Regan reported that Samsung is working on a Ray-Ban Meta glasses competitor with Google Gemini AI, though it’s unclear whether this product will have a HUD.

If it does have a HUD, it won’t be alone on the market. In addition to the dozen or so startups that showed off prototypes at CES, Mark Zuckerberg’s Meta reportedly plans to launch its own smart glasses with a HUD later this year.

Like the glasses Google showed at TED2025, Meta’s glasses reportedly have a small display in the right eye and a strong focus on multimodal AI (in Meta’s case, the Llama-powered Meta AI).

Unlike Google’s glasses, though, which appeared to be primarily controlled by voice, Meta’s HUD glasses will reportedly also be controllable via finger gestures, sensed by an included sEMG neural wristband.

Apple too is reportedly working on smart glasses, with apparent plans to release a product in 2027.

All three companies are likely hoping to build on the initial success of the Ray-Ban Meta glasses, which recently passed 2 million units sold and are set to see their production greatly increased.

Expect competition in smart glasses to be fierce in the coming years, as the tech giants battle for control of the AI that sees what you see and hears what you hear, and the ability to project images into your view at any time.