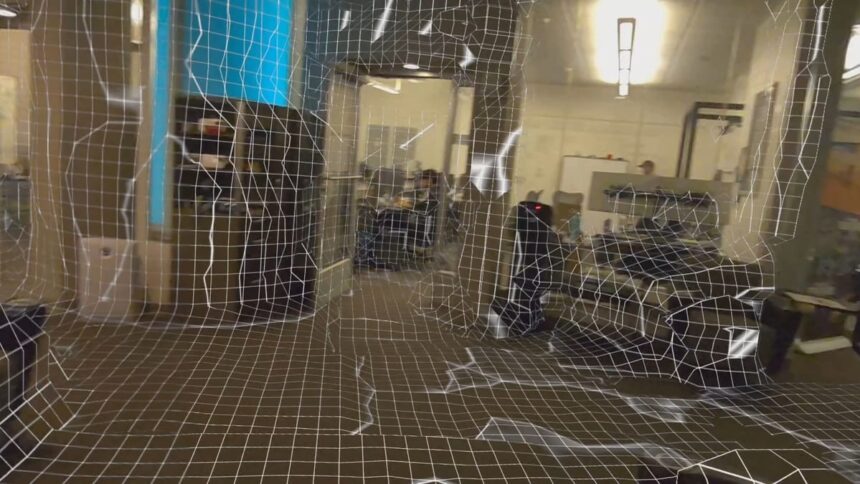

Lasertag’s developer implemented continuous scene meshing on Quest 3 & 3S, eliminating the need for the room setup process and avoiding its problems.

Quest 3 and Quest 3S let you scan your room to generate a 3D scene mesh that mixed reality apps can use to let virtual objects interact with physical geometry or to reskin your environment. But there are two major problems with Meta’s current system.

The first problem is that it requires you to have performed the scan in the first place. This takes anywhere from around 20 seconds to several minutes of looking or even walking around, depending on the size and shape of your room, adding significant friction compared to just launching directly into an app.

The other problem is that these scene mesh scans represent only a moment in time: when you performed the scan. If furniture has moved or objects have been added to or removed from the room since then, these changes won’t be reflected in mixed reality unless the user manually updates the scan. For example, if someone was standing in the room with you during the scan, their body shape is baked into the scene mesh.

Continuous scene meshing in beta Lasertag build.

Quest 3 and Quest 3S also offer another way for apps to obtain information about the 3D structure of your physical environment, though: the Depth API.

The Depth API provides real-time first-person depth frames, generated by the headset by comparing the disparity between the two tracking cameras on the front. It works up to a distance of around 5 meters, and is typically used to implement dynamic occlusion in mixed reality, since you can determine whether virtual objects should be occluded by physical geometry.
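To make the idea concrete, here is a minimal sketch in plain Python of the two steps described above: turning stereo disparity into depth, then comparing physical and virtual depth per pixel. It uses the textbook relation for a rectified stereo pair and is not Meta’s actual Depth API. The function names, the `bias_m` tolerance, and the list-of-lists depth layout are all assumptions made for illustration.

```python
from typing import List, Optional

DepthImage = List[List[Optional[float]]]  # meters; None = depth unknown


def depth_from_disparity(disparity_px: float, focal_px: float,
                         baseline_m: float) -> Optional[float]:
    """Textbook stereo relation: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        return None  # no stereo match, so depth is unknown
    return focal_px * baseline_m / disparity_px


def occlusion_mask(physical: DepthImage, virtual: DepthImage,
                   bias_m: float = 0.02) -> List[List[bool]]:
    """True where real geometry is closer than the virtual pixel.

    bias_m is a hypothetical tolerance to reduce flicker where the two
    depths are nearly equal.
    """
    mask = []
    for phys_row, virt_row in zip(physical, virtual):
        mask.append([
            p is not None and v is not None and p + bias_m < v
            for p, v in zip(phys_row, virt_row)
        ])
    return mask
```

A renderer would hide or fade the virtual pixels wherever the mask is true. On the headset this comparison would run in a shader rather than in a Python loop.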

An example of a game that uses the Depth API is Julian Triveri’s colocated multiplayer mixed reality Quest 3 game Lasertag. As well as for occlusion, the public build of Lasertag uses the Depth API to determine in each frame whether your laser should collide with real geometry or hit your opponent. It doesn’t use Meta’s scene mesh, because Triveri didn’t want to add the friction of the setup process or be limited by what was baked into the mesh.
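The article does not describe how Lasertag performs this per-frame test, but one plausible approach is to march along the laser ray in camera space and compare each sample against the depth frame. The sketch below assumes a simple pinhole camera looking down +z with the principal point at the image center. `laser_hit_distance` and all of its parameters are hypothetical names.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def laser_hit_distance(depth: List[List[float]], origin: Vec3, direction: Vec3,
                       focal_px: float, max_range_m: float = 5.0,
                       step_m: float = 0.05) -> Optional[float]:
    """Return the distance at which the ray passes behind real geometry.

    origin and direction are in camera space (camera looks down +z) and
    direction is assumed to be normalized. Returns None if nothing is hit
    within max_range_m.
    """
    height, width = len(depth), len(depth[0])
    cx, cy = width / 2.0, height / 2.0
    for i in range(1, round(max_range_m / step_m) + 1):
        t = i * step_m
        x = origin[0] + direction[0] * t
        y = origin[1] + direction[1] * t
        z = origin[2] + direction[2] * t
        if z <= 0:
            continue  # sample is behind the camera
        # Project the sample into the depth image.
        u = math.floor(focal_px * x / z + cx)
        v = math.floor(focal_px * y / z + cy)
        if not (0 <= u < width and 0 <= v < height):
            continue  # outside the current view: geometry unknown
        if z >= depth[v][u]:
            return t  # sample lies at or behind the measured surface
    return None
```

Note the limitation this implies: samples that fall outside the current depth frame cannot be tested at all, because only geometry visible this frame is known.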

And the beta release channel of Lasertag goes much further than this.

In the beta release channel, Triveri uses the depth frames to construct, over time, a 3D volume texture on the GPU representing your physical environment. That means that, despite still not needing any kind of initial setup, this version of Lasertag can simulate laser collisions even for real-world geometry you’re not currently looking directly at, as long as you’ve looked at it before. In an internal build, Triveri can convert this into a mesh using an open-source Unity implementation of the marching cubes algorithm.
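As a rough CPU-side illustration of accumulating depth frames into a persistent volume, the sketch below unprojects each depth pixel into world space and marks the voxel it lands in. It is not Triveri’s implementation. A Python set of voxel coordinates stands in for the GPU 3D texture, the camera pose is reduced to a position plus a yaw angle, image and world axes are treated as aligned, and every name (`VOXEL_M`, `integrate_frame`, `raycast`) is hypothetical.

```python
import math
from typing import List, Optional, Set, Tuple

Voxel = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]
VOXEL_M = 0.1  # assumed voxel edge length in meters


def to_voxel(x: float, y: float, z: float) -> Voxel:
    return (math.floor(x / VOXEL_M), math.floor(y / VOXEL_M),
            math.floor(z / VOXEL_M))


def integrate_frame(volume: Set[Voxel], depth: List[List[Optional[float]]],
                    focal_px: float, cam_pos: Vec3, cam_yaw_rad: float) -> None:
    """Unproject every valid depth pixel and mark its world-space voxel."""
    height, width = len(depth), len(depth[0])
    cx, cy = width / 2.0, height / 2.0
    cos_y, sin_y = math.cos(cam_yaw_rad), math.sin(cam_yaw_rad)
    for v in range(height):
        for u in range(width):
            d = depth[v][u]
            if d is None or d <= 0:
                continue
            # Camera-space point through the pixel center.
            xc = (u + 0.5 - cx) / focal_px * d
            yc = (v + 0.5 - cy) / focal_px * d
            # Rotate about the vertical axis, then translate into world space.
            xw = cos_y * xc + sin_y * d + cam_pos[0]
            yw = yc + cam_pos[1]
            zw = -sin_y * xc + cos_y * d + cam_pos[2]
            volume.add(to_voxel(xw, yw, zw))


def raycast(volume: Set[Voxel], origin: Vec3, direction: Vec3,
            max_range_m: float = 5.0) -> Optional[float]:
    """March a world-space ray through the volume in half-voxel steps."""
    step = VOXEL_M / 2.0
    for i in range(1, round(max_range_m / step) + 1):
        t = i * step
        p = (origin[0] + direction[0] * t,
             origin[1] + direction[1] * t,
             origin[2] + direction[2] * t)
        if to_voxel(*p) in volume:
            return t
    return None
```

Because `volume` persists between frames, `raycast` can report a hit on a surface that no current depth frame covers, which is the property described above. A real implementation would likely also need to clear voxels that later frames show to be empty, which this sketch omits.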

Earlier, Triveri also experimented with networked heightmapping. In these tests, each headset in the session shared its continuously constructed heightmap, derived from the depth frames, with the other headsets as it built it, meaning everyone got a result that’s the sum of what each headset had scanned. This isn’t currently available, and it relied on older underlying techniques that Triveri doesn’t currently plan to bring forward. But it’s still an interesting experiment that future mixed reality systems could explore.
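The merging step could look something like the following sketch. It assumes all headsets already share one coordinate frame and grid layout, and that overlapping cells are resolved by keeping the tallest observed height. That merge rule, like the `merge_heightmaps` name, is an assumption for illustration and not something the experiment is confirmed to have used.

```python
from typing import List, Optional

Heightmap = List[List[Optional[float]]]  # meters; None = cell not yet scanned


def merge_heightmaps(maps: List[Heightmap]) -> Heightmap:
    """Combine per-headset heightmaps defined on the same grid.

    A cell is known in the result if any headset has scanned it. Where
    several headsets disagree, the tallest height wins (an assumed rule).
    """
    rows, cols = len(maps[0]), len(maps[0][0])
    merged: Heightmap = [[None] * cols for _ in range(rows)]
    for heightmap in maps:
        for r in range(rows):
            for c in range(cols):
                h = heightmap[r][c]
                if h is None:
                    continue
                current = merged[r][c]
                merged[r][c] = h if current is None else max(current, h)
    return merged
```

With this rule, a cell scanned by only one headset still ends up in everyone’s map, which matches the "sum of what each headset has scanned" behavior described above.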

Earlier experimentation with networked continuous heightmapping.

So why doesn’t Meta do continuous meshing instead of its current room scanning system?

On Apple Vision Pro and Pico 4 Ultra, this is already how scene meshing works. On these headsets, there is no specific room setup process, and the headset continuously scans the environment in the background and updates the mesh. But the reason they can do this is that they have hardware-level depth sensors, whereas Quest 3 and Quest 3S use computationally expensive computer vision algorithms to derive depth (in Quest 3’s case, assisted by a projected IR pattern).

Using the Depth API at all has a notable CPU and GPU cost, which is why many Quest mixed reality apps still don’t have dynamic occlusion. And using these depth frames to construct a mesh is even more computationally expensive.

Essentially, Lasertag trades off performance for the advantage of continuous scene understanding without a setup process. And this is why Quest 3 and 3S don’t do this for the official scene meshing system.

Lasertag beta gameplay.

In January, Meta indicated that it plans to eventually make scene meshes automatically update to reflect changes, but the wording suggests it will still require the initial setup process as a baseline.

Lasertag is available for free on the Meta Horizon platform for Quest 3 and Quest 3S headsets. The public version uses the current depth frame for laser collisions, while the pre-release beta constructs a 3D volume over time.