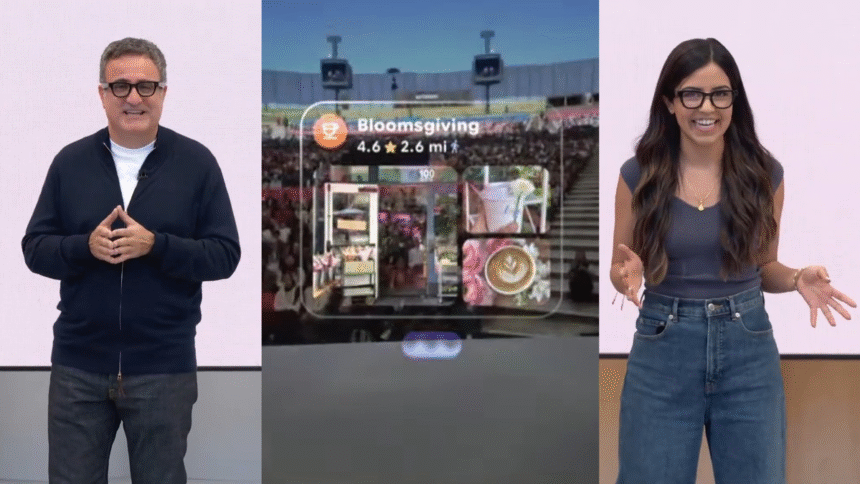

At Google I/O 2025, the company gave another on-stage demo of “prototype” smart glasses with a HUD.

We say another because the same duo, Shahram Izadi (Google’s Android XR lead) and Nishtha Bhatia (a Google PM), gave a very similar demo on stage at TED2025 last month.

Google Shows Off Sleek AI Smart Glasses With A HUD

At TED2025 Google showed off sleek smart glasses with a HUD, though the company described them as “conceptual hardware”.

The TED demo focused almost entirely on Gemini AI capabilities, as well as navigation, and the new I/O demo strongly focuses on Gemini too. But it also showcased some broader functionality, including receiving and replying to message notifications and taking photos.

Izadi and Bhatia finished the demo by attempting real-time speech translation, a feature Meta rolled out to the Ray-Ban Meta glasses last month. While it partially worked, the cast view from Bhatia’s glasses froze halfway through.

“We said it’s a risky demo”, Izadi remarked.

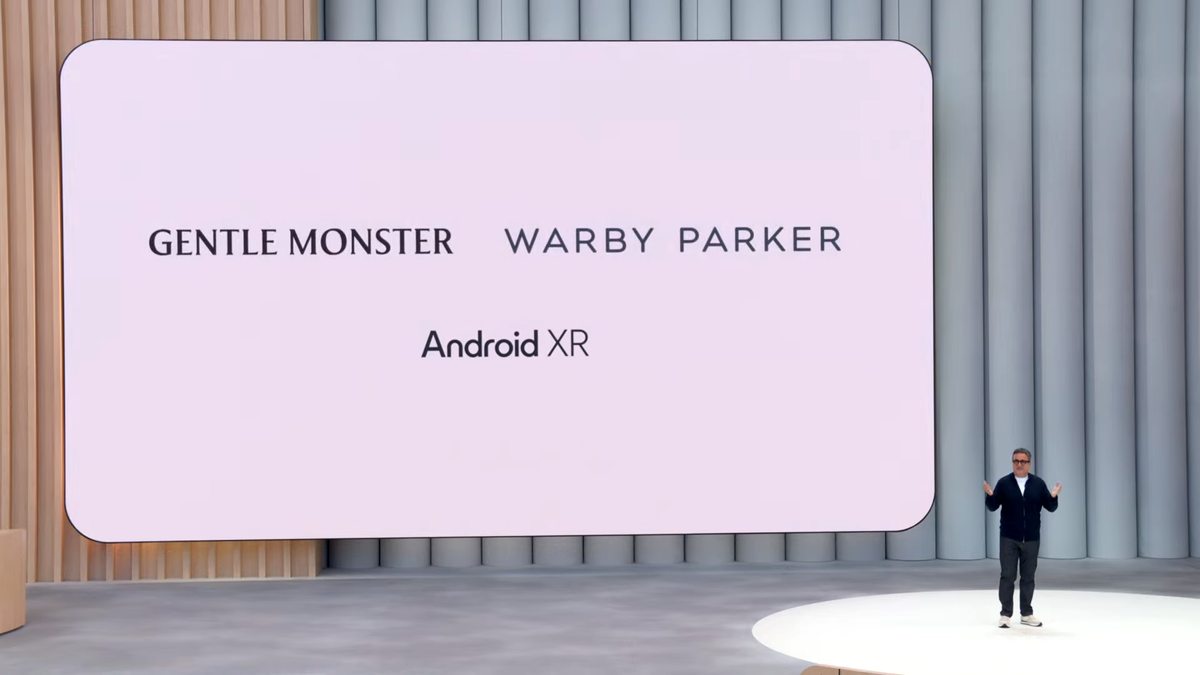

Google Working With Gentle Monster & Warby Parker On Gemini Smart Glasses

Google is working with Gentle Monster and Warby Parker on smart glasses with Gemini AI, the company’s plan to directly compete with Ray-Ban Meta.

What’s actually new at I/O compared to TED, though, is the announcement of actual products. Google says it’s working with Gentle Monster and Warby Parker, emerging rivals to Ray-Ban and Oakley owner EssilorLuxottica, mirroring Meta’s strategy of shipping smart glasses in fashionable designs.

However, Google described what was shown on stage at I/O as “prototype” hardware, said that including a display is “optional” for actual products, and didn’t say whether the first Gentle Monster and Warby Parker products will include a HUD. It’s possible they’ll be more basic devices like Ray-Ban Meta glasses.

If any of these glasses do have a HUD, they won’t be alone on the market. In addition to the existing Even Realities G1 and Frame glasses, Mark Zuckerberg’s Meta reportedly plans to launch its own smart glasses with a HUD later this year. Unlike Google’s glasses though, which appeared to be primarily controlled by voice, Meta’s HUD glasses will reportedly also be controllable via finger gestures, sensed by an included sEMG neural wristband.

Apple too is reportedly working on smart glasses, with apparent plans to launch a product in 2027.